Tao Xie | North Carolina State University | xie@csc.ncsu.edu

Ahmed E. Hassan | University of Victoria | ahmed@uvic.ca

Part I

Mining Software Engineering Data goals:

- Transform static record-keeping SE data to active data.

- Make SE data actionable by uncovering hidden patterns and trends.

Uses of mining SE data:

- Gain empirically-based understanding of software development.

- Predict, plan, and understand various aspects of a project.

- Support future development and project management activities.

Types of SE Data:

- Historical data

Used primarily for record-keeping activities (checking the status of a bug, retrieving old code)

- Version or source control:

- cvs, subversion, perforce.

- Store changes to the data

- Bug systems:

- bugzilla, GNATS, JIRA.

- Follow the resolution of defects.

- Mailing lists:

- Mbox

- Record rationale for decisions throughout the life of a project.

- Multi-run and Multi-site data

- Execution traces

- Deployment logs

Software Maintenance Activities

- Perfective: add new functionality

- Corrective: fix faults

- Adaptive: new file formats, refactoring

- File

- Function

- Dependency (e.g. Call )

Source Control Repositories

A source control system tracks changes to ChangeUnits.

Examples of ChangeUnits:

For each ChangeUnit, it tracks the developer, time, change message, co-changing Units.

- High precision to avoid wasting time

- High recall to avoid bugs

- Recall as high as 44%

- Precision around 30%

- Previous bugs are good predictor of future bugs.

- The more a file changes, the more likely it will have bugs in it.

- Recent changes affect more the bug potential of a file over older changes (weighted time damp models)

- Number of developers is of little help in predicting bugs.

- Hard to generalize bug predictors across projects unless in similar domains.

- 71% of files that import compiler packages, had to be fixed later on.

- 14% of all files that import ui packages, had to be fixed later on.

- Recall/Precision in 50-60% range.

- Strongly correlates with code change activity.

- Moderately correlates with document change activity.

- Expertise vs. interest

- RTC : Review-then-Commit

- CTR : Commit-then-Review

- An API may serve multiple functionalities --> Different styles of API usage

- Can we synthesize jungloid code fragments automatically?

- Given a simple query describing the desired code in terms of input and output types, return a code segement.

- Mine framework reuse patterns

- Membership relationships

- A class contains membership functions

- Reuse relationships

- Class inheritance / instantiation

- Function invocations / overriding

- Membership relationships

- Mine software plagiarism

- Program dependence graphs

- A copy-paste segment typically does not have big gaps.

- Use a maximum gap threshold to control.

- Output the instances of patterns ( i.e., the copy-pasted code segments) instead of the patterns.

- Use small copy-pasted segments to form larger ones.

- Prune false positives: tiny segments, unmappable segments, overlapping segments, and segments with large gaps.

- Identifier a in segment S1 is changed to b in segment S3 3 times, but remains unchanged once - likely a bug

- The heuristics may not be correct all the time

- Training dataset: tuples for model construction

- Each tuples/sample belongs to a predefined class

- Classification rules, decision trees, or math formulae

- Estimate accuracy of the model using an independent test set.

- Acceptable accuracy --> apply the model to classify tuples with known class labels.

- Supervision: objects in the training data set have labels

- New data is classified based on the training set

- The class labels of training data are unknown

- Given a set of measurements, observations, etc. with the aim of establishing the existence of classes or clusters in the data.

- Positive samples: past bugs

- Negative samples: not bug reports

- Consider the k closest cases reported before

- Compare sum 1/d for bug cases and not-bug cases, where d is the similarity between the current state and the reported states.

- If the current state is more similar to bugs, predict a bug.

- Use Latent Semantic Analysis (LSA) to find similarity between software systems.

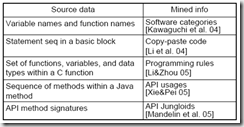

- Use identifiers (e.g., variable names, function names) as features

- "gtk_window" represents some window

- The source code near "gtk_window" contains some GUI operation on the window.

- Extracting categories using frequent identifiers

- "gtk_window", "gtk_main", and "gpointer" --> GTK related software system

- Use LSA to find relationships between identifiers

Change Propagation

Measuring Change Propagation

We want:

Guiding Change Propagation

Mine association rules from change history.

Use rules to help propagate changes:

High precision and recall reached in < 1mth

Prediction accuracy improves prior to a release (i.e., during maintenance phase)

Code Sticky Notes

Traditional dependency graphs and program understanding models usually do not use historical information.

Static dependencies capture only a static view of a system - not enough detail!

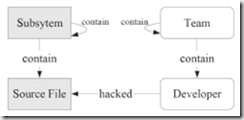

Development history can help understand the current structure (architecture) of a software system.

Studying Conway's Law

"The structure of a software system is a direct reflection of the structure of the development team"

Predicting Bugs

Studies have shown that most complexity metrics correlate well with LOC ! (Lines of Code)

Noteworthy findings:

Example 1 : using imports in Eclipse to predict bugs

Example 2 : don't program on fridays

Percentage of bug-introducing changes for eclipse, most high in Friday.

Classifying changes as Buggy or Clean

Given a change can we warn a developer that there is a bug in it ?

Project Communication - Mailing lists

Most open source projects communicate through mailing lists or IRC channels.

Rich source of information about the inner workings of large projects.

Discussion cover topics such as future plans, design decision, project policies, code or path reviews.

Social network analysis could be performed on discussion threads.

Social Network Analysis

Mail list activity

Social network measures (in-degree, out-degree, between's) indicate that committers play much more significant roles in the mailing list community that non-committers.

Immigration rate of developers

When will a developer be invited to join a project?

The patch review process

Two review styles

80% patches reviewed within 3.5 days and 50% reviewed in < 19 hrs

Measure a team's morale around release time

Study the content of messages before and after release.

Use dimensions from a psychometric text analysis tool.

Program Source Code

Code Entities

Mining API Usage Patterns

How should an API be used correctly?

"I know what type of object I need, but I don't know how to write the code to get the object"

"I know what method call I need, but I don't know how to write code before and after this method call"

Relationships between Code Entities

Program Execution Traces

Method-Entry/Exit States

Goal: Mine specifications (pre/post conditions) or object behavior (object transition diagrams)

State of an object: values of transitively reachable fields.

Method-entry state: Receiver-object state, method argument values.

Method-exit state: Receiver-object state, updated method argument values, method return value.

Other Profiled program states

Goal: detect or locate bugs.

Values of variables at certain code locations

Object/static field read/write

Method-call arguments

Method returns

Sampled predictions on values of variables

Executed Structural Entities

Goal: Locate bugs.

Executed branches/paths, def-use pairs.

Executed function/method calls.

Group methods invoked on the same object

Profiling options

Execution hit vs. count

Execution order (sequences)

Part II

How can you mine Software Engineering data?

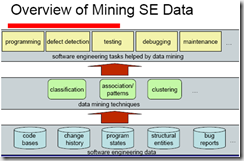

Overview of data mining techniques

Association rules and frequent patterns

Classification

Clustering

Misc.

Association Rules

Example:

Finding highly correlated method call pairs.

Check the revisions (fixes to bugs), find the pairs of method calls whose confidences have improved dramatically by frequent added fixes.

Those are the matching method call pairs that may often be violated by programmers

Conflicting Patterns

999 out of 1000 times spin_lock is followed by spin_unlock

The single time that spin_unlock does not follow may likely be an error.

We can detect an error without knowing the correctness rules.

Detect Copy-Paste Code

Apply closed sequential pattern mining techniques.

Customizing the techniques:

Find Bugs in Copy-Pasted Segments

For two copy-pasted segments, are the modifications consistent?

The lower the unchanged rate of an identifier, the more likely there is a bug.

Mining Rules in Traces

Mining association rules or sequential patterns S --> F, where S is a statement and F is the status of program failure.

The higher the confidence, the more likely S is faulty or related to a fault.

Using only one statement at the left side of the rule can be misleading, since a fault may led by a combination of statements.

Frequent patterns can be used to improve.

Mining Emerging Patterns in Traces

A method executed only in failing runs is likely to point to the defect.

Comparing the coverage of passing and failing program runs helps.

Mining patterns frequent in failing program runs but infrequent in passing program runs.

Sequential patterns may be used.

Classification

Classification: A 2-step Process

Model construction: describe a set of predetermined classes

Model application: classify unseen objects

Supervised learning (Classification)

Unsupervised learning (Clustering)

GUI-Application Stabilizer

Given a program state S and an event e, predict whether e likely results in a bug

A K-NN based approach

Clustering

What is clustering ==> group data into clusters.

Similar to one another within the same cluster.

Dissimilar to the objects in other clusters.

Unsupervised learning: no predefined classes.

Clustering and Categorization

Software categorization

Partitioning software systems into categories

Categories predefined - a classification problem

Categories discovered automatically - a clustering problem

Software Categorization - MUDABlue

Understanding source code

Other Mining Techniques

Automation/grammar/regular expression learning

Searching/matching

Concept analysis

Template-based analysis

Abstraction-based analysis